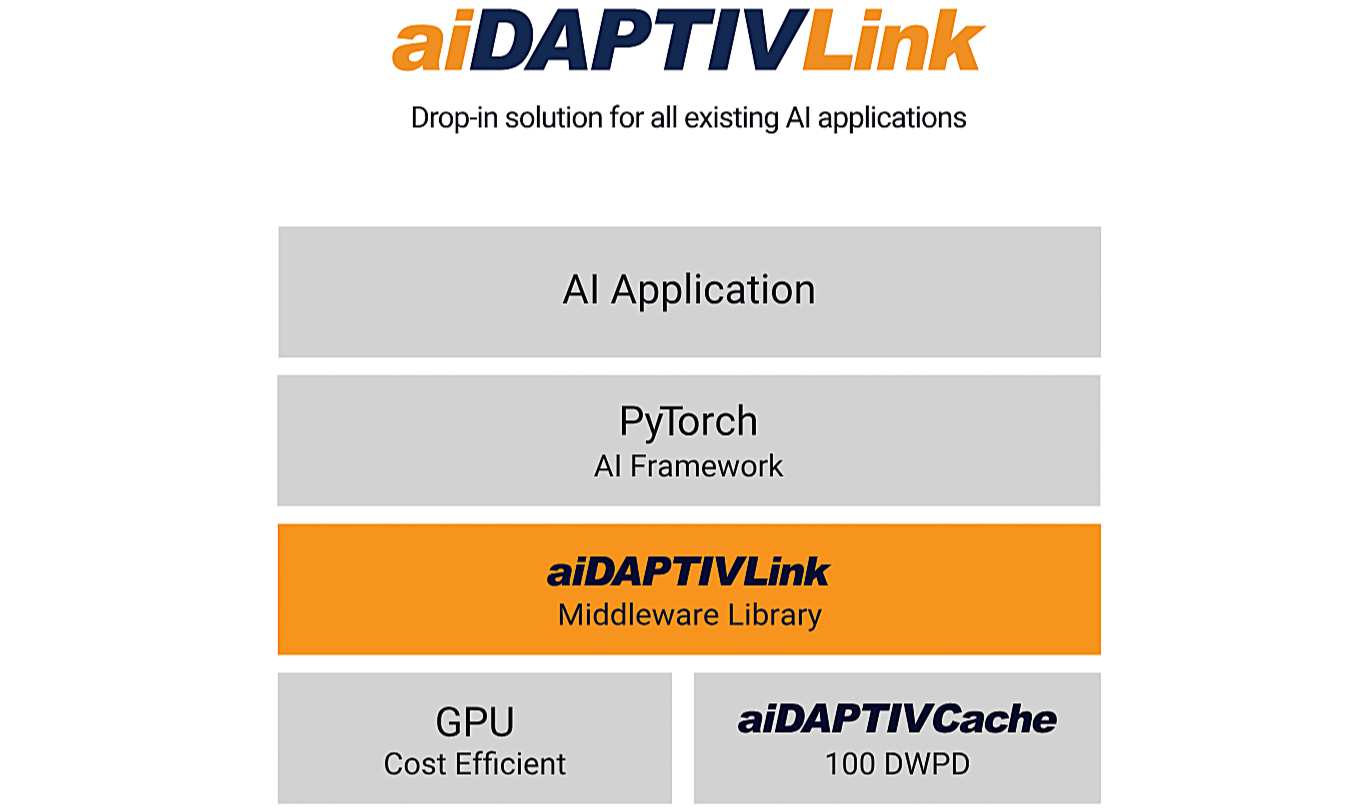

BENEFITS

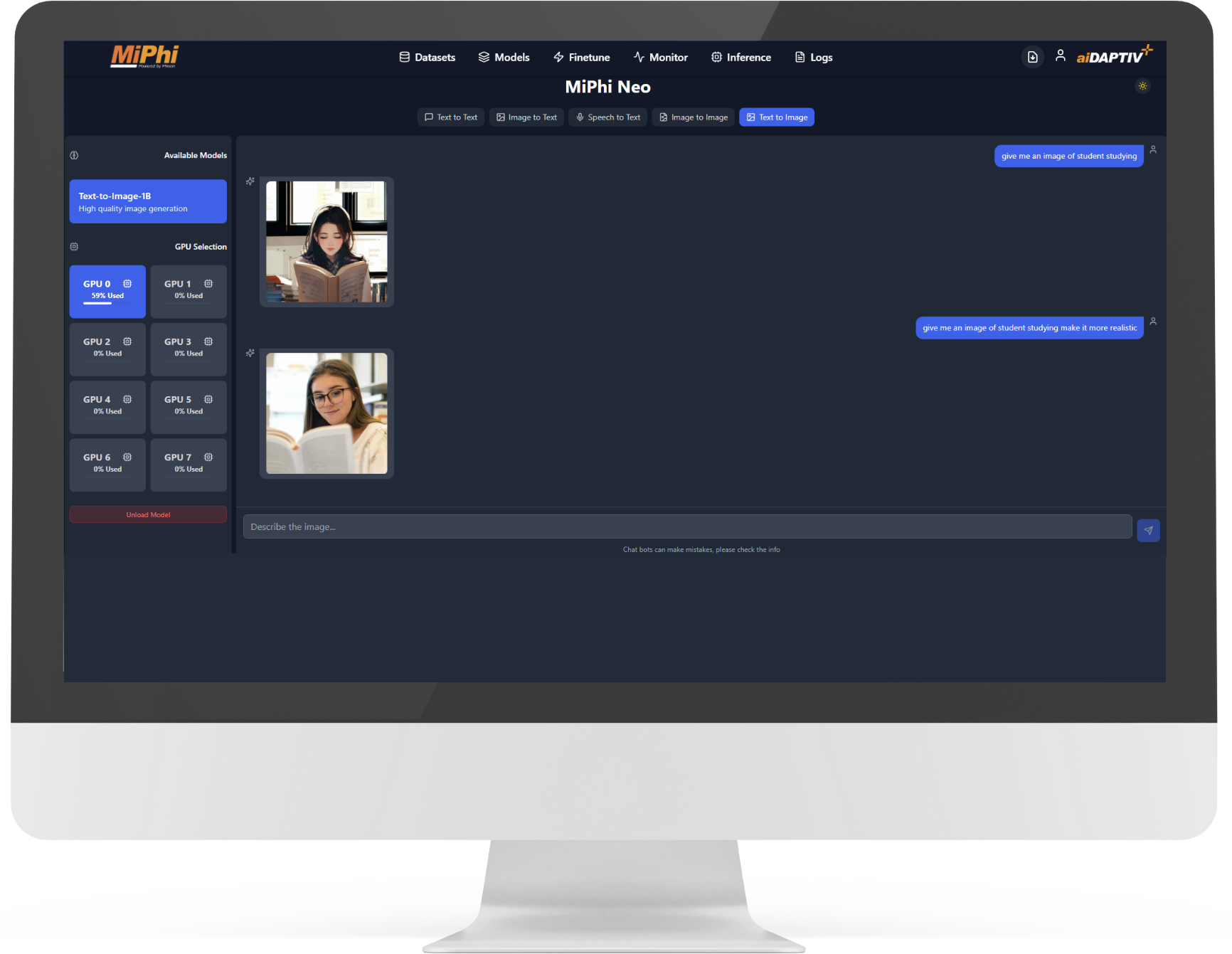

- Transparent drop-in

- No need to change your AI application

- Reuse existing hardware or add nodes

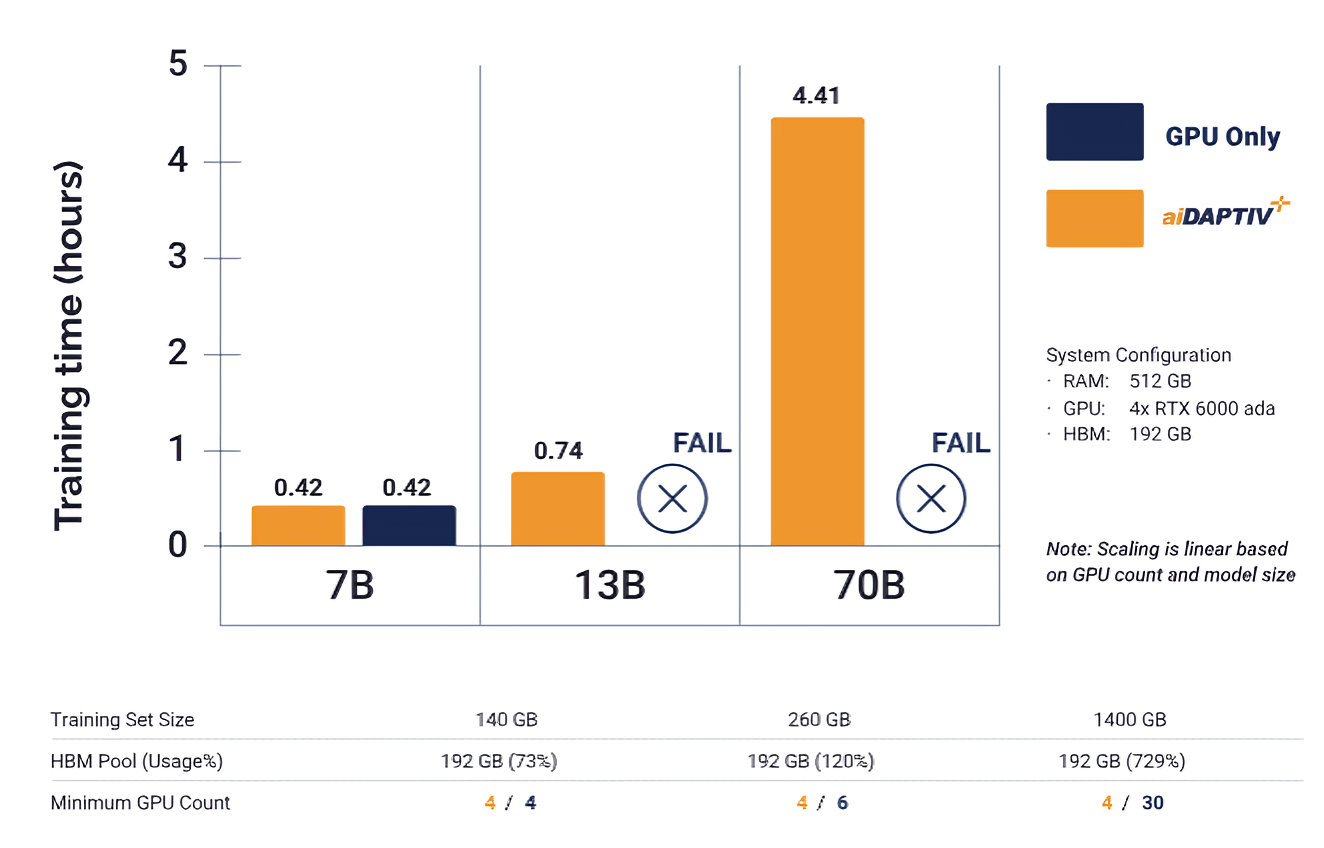

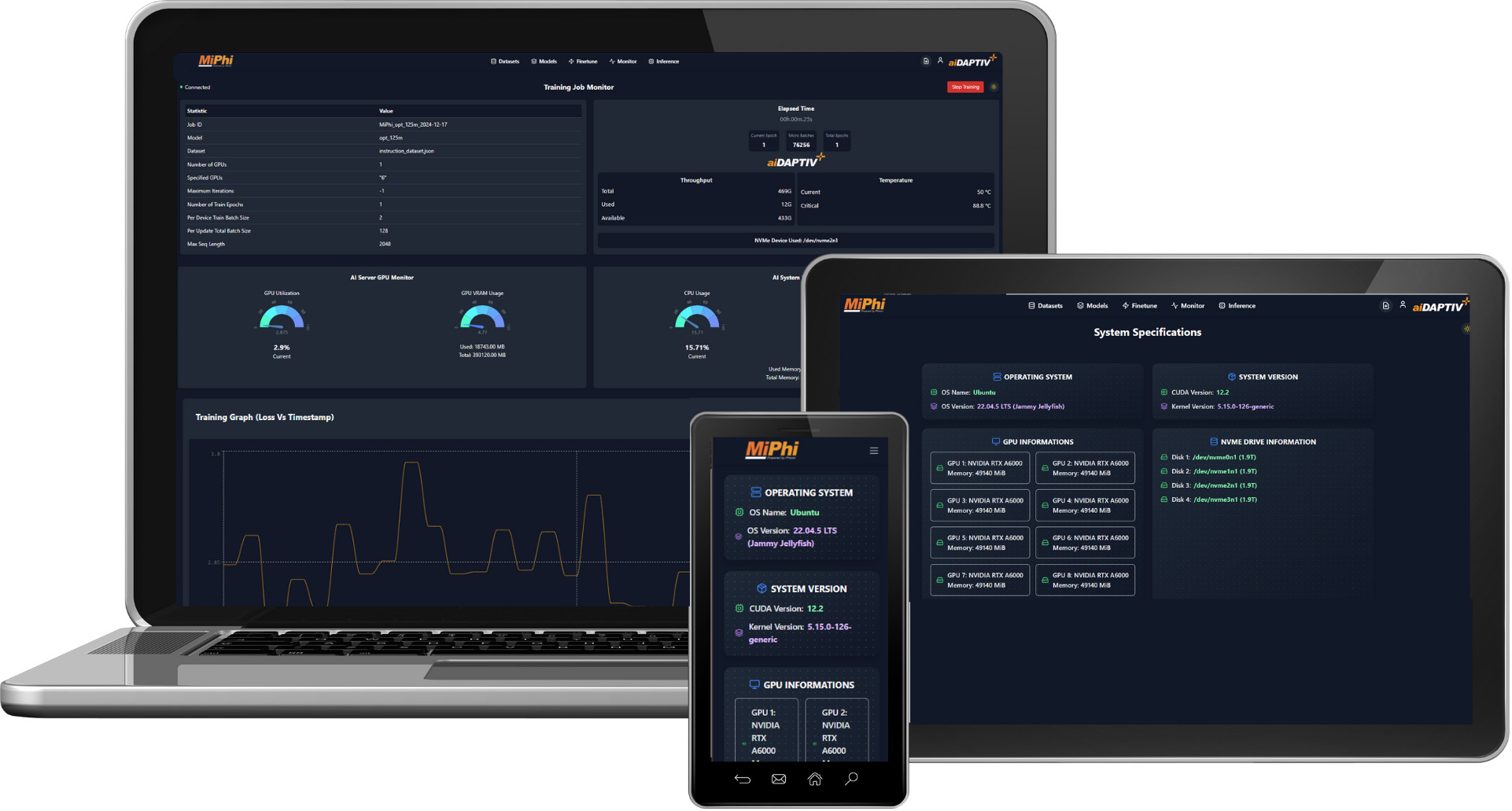

aiDAPTIV+ MIDDLEWARE

- Slice the model and assign slices to each GPU

- Hold pending slices on aiDAPTIVCache

- Swap pending slices with finished slices on the GPU
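The slice, hold, and swap steps can be illustrated with a toy scheduler. This is a minimal sketch under stated assumptions and not the aiDAPTIV+ implementation. `SliceScheduler` and `gpu_capacity` are hypothetical names. Each slice is a plain Python function that stands in for a group of model layers, and the "cache" is a set of slice indices that stands in for aiDAPTIVCache. The sketch models a single GPU that can hold only `gpu_capacity` slices at once. During an in-order pass, the oldest resident slice has already run, so it is the one swapped out to make room for the next pending slice.

```python
from collections import OrderedDict
from typing import Callable, List


class SliceScheduler:
    """Hypothetical toy: run model slices in order on a GPU that fits only a few."""

    def __init__(self, slices: List[Callable[[float], float]], gpu_capacity: int):
        if gpu_capacity < 1:
            raise ValueError("gpu_capacity must be at least 1")
        self.slices = slices
        self.gpu_capacity = gpu_capacity
        # Pending slices start in the cache (stand-in for aiDAPTIVCache).
        self.cache = set(range(len(slices)))
        # Slices resident on the GPU, kept in load order (oldest first).
        self.gpu: "OrderedDict[int, Callable[[float], float]]" = OrderedDict()
        self.swaps = 0

    def _ensure_resident(self, idx: int) -> None:
        if idx in self.gpu:
            return
        if len(self.gpu) >= self.gpu_capacity:
            # In an in-order pass the oldest resident slice has finished,
            # so move it back to the cache to free room on the GPU.
            finished, _ = self.gpu.popitem(last=False)
            self.cache.add(finished)
            self.swaps += 1
        # Bring the pending slice from the cache onto the GPU.
        self.cache.discard(idx)
        self.gpu[idx] = self.slices[idx]

    def forward(self, x: float) -> float:
        # Run every slice in order, swapping as needed.
        for idx in range(len(self.slices)):
            self._ensure_resident(idx)
            x = self.gpu[idx](x)
        return x


if __name__ == "__main__":
    # Six toy slices, each adding 1, on a GPU that holds only two at a time.
    sched = SliceScheduler([lambda v: v + 1 for _ in range(6)], gpu_capacity=2)
    print(sched.forward(0.0), sched.swaps)
```

With six slices and room for two, the pass completes with four swaps, and slices 4 and 5 are left resident on the GPU. With several GPUs, one option (also an assumption) is to give each GPU its own scheduler over the slices assigned to it.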

SYSTEM INTEGRATORS

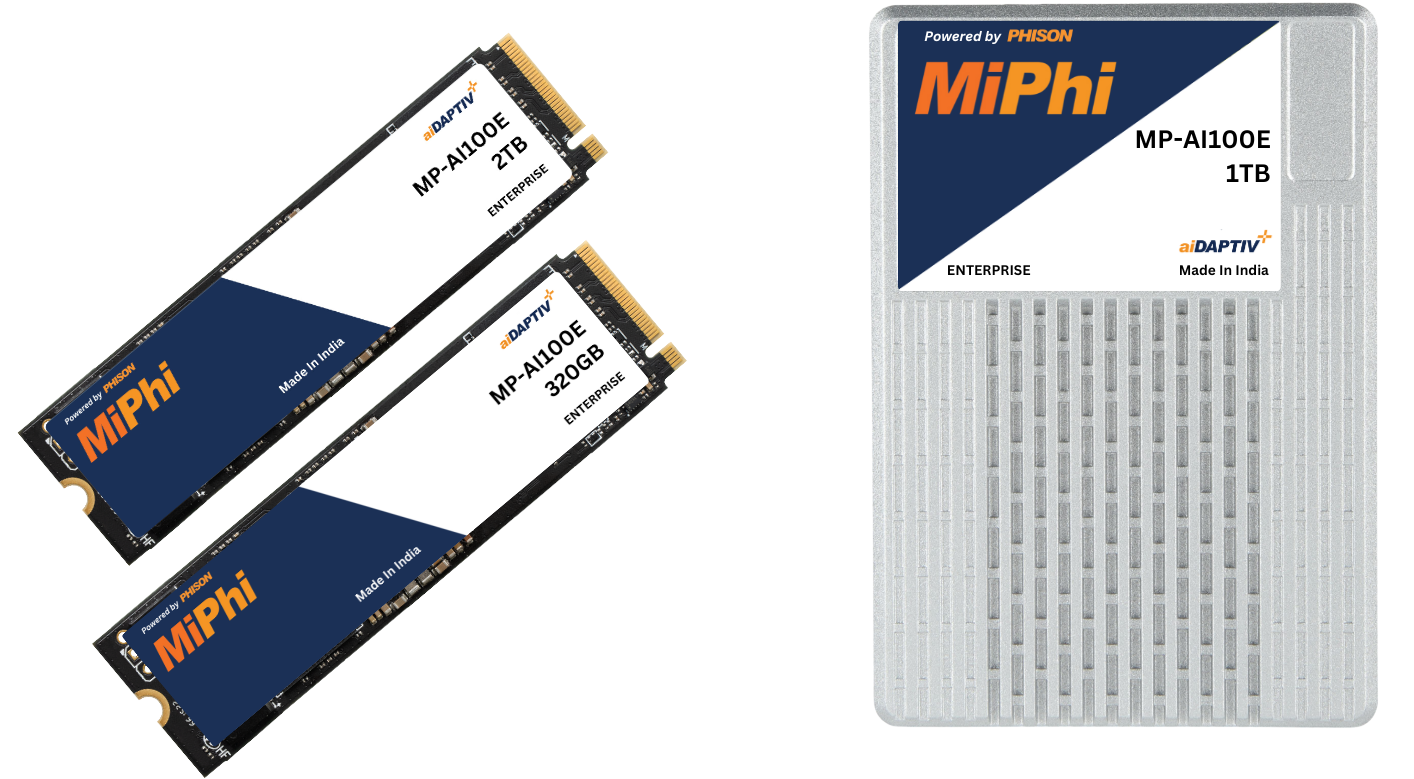

- Access to ai100E SSD

- Middleware library license

- Full MiPhi support during system bring-up